Artificial intelligence is no longer stretching data centre infrastructure at the margins. It is reshaping the basic assumptions that underpin how facilities are designed, powered and cooled. As GPU clusters grow larger and more power-dense, the question facing operators is no longer whether they can deploy AI at scale, but whether the physical infrastructure can keep pace.

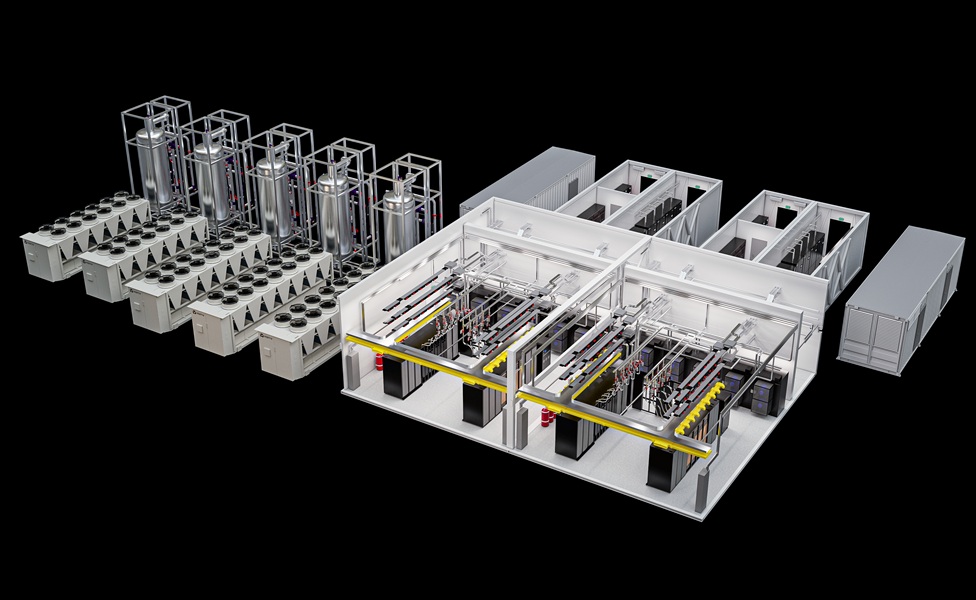

That tension sits behind Vertiv’s latest move to expand its modular liquid cooling infrastructure portfolio. The company has introduced new configurations of its MegaMod HDX platform, aimed at supporting the extreme power densities associated with AI and high-performance computing workloads across North America and EMEA. While the announcement centres on a specific product, the broader story is how AI is accelerating a shift towards prefabricated, hybrid cooling architectures as operators race to deploy capacity faster than traditional build cycles allow.

Vertiv says the new MegaMod HDX configurations are engineered to support rack densities from 50 kW to more than 100 kW per rack, with total power capacity scaling up to 10 MW. Those figures reflect the reality of modern AI environments, where traditional air cooling alone is increasingly insufficient, and where deployment speed has become a strategic differentiator.

When air cooling is no longer enough

AI workloads, particularly those driven by advanced GPUs, generate heat levels that conventional data centre designs were never intended to handle. As a result, operators are turning to liquid cooling not as an experimental option but as a necessity. The MegaMod HDX platform combines direct-to-chip liquid cooling with air-cooled architectures, creating a hybrid approach that aims to balance efficiency, flexibility and operational resilience.

The platform is designed to support pod-style AI environments and large GPU clusters, with two main configurations. The compact version uses a standard module height, supports up to 13 racks and delivers up to 1.25 MW of power capacity. The larger combo configuration uses an extended-height design, accommodates up to 144 racks and scales to 10 MW. Both are intended to let operators match infrastructure to workload requirements rather than overbuilding capacity in anticipation of future demand.
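A quick back-of-envelope check shows how the two configurations line up with the 50 kW to 100+ kW per rack range quoted above. The sketch below simply divides each configuration's stated capacity by its maximum rack count; it assumes (the article does not say this) that capacity is shared evenly across every rack position, whereas real deployments typically mix dense GPU racks with lighter network and storage racks.

```python
# Back-of-envelope average rack density for the two MegaMod HDX configurations.
# Assumption: the quoted capacity is divided evenly across all rack positions;
# this is an illustration, not a Vertiv sizing method.

CONFIGS = {
    "compact": {"racks": 13, "capacity_kw": 1_250},   # up to 1.25 MW
    "combo": {"racks": 144, "capacity_kw": 10_000},   # up to 10 MW
}


def average_kw_per_rack(racks: int, capacity_kw: float) -> float:
    """Return mean power per rack in kW if capacity is split evenly."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    return capacity_kw / racks


if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        avg = average_kw_per_rack(cfg["racks"], cfg["capacity_kw"])
        print(f"{name}: {avg:.1f} kW per rack on average")
```

On these assumptions the compact module averages roughly 96 kW per rack and the combo roughly 69 kW per rack, both inside the quoted range, with the compact unit sitting near its upper end.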

This modularity speaks to a wider industry shift. As AI demand remains volatile and investment cycles shorten, data centre operators are increasingly cautious about committing to fixed, monolithic builds. Prefabricated modules offer a way to deploy capacity quickly, standardise design and expand incrementally as workloads grow.

Designing for resilience at AI scale

Beyond raw capacity, resilience has become a defining concern for AI infrastructure. Downtime in GPU-dense environments carries a high financial cost, particularly where compute is tightly coupled to revenue-generating services. The MegaMod HDX architecture includes distributed redundant power, allowing continuous operation even if a module goes offline.
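Distributed redundancy generally means spreading the load across several power modules sized so that the survivors can absorb the share of any one that fails; for example, four modules sized so that any three can carry the full load. The article does not describe how Vertiv implements this, so the sketch below is a generic check under that assumption. The function names, module ratings and loads are hypothetical.

```python
# Hypothetical check for a distributed redundant power layout: can the
# remaining modules carry the IT load if any single module goes offline?
# Ratings and loads are illustrative assumptions, not Vertiv figures.

from typing import Sequence


def survives_single_module_loss(module_kw: Sequence[float], load_kw: float) -> bool:
    """True if losing any one module still leaves capacity >= load."""
    if not module_kw:
        return False
    total = sum(module_kw)
    # The worst case is losing the largest module.
    return total - max(module_kw) >= load_kw


def load_per_module_after_loss(n_modules: int, load_kw: float) -> float:
    """Per-module load when one of n equal modules fails and load is shared evenly."""
    if n_modules < 2:
        raise ValueError("need at least two modules for redundancy")
    return load_kw / (n_modules - 1)


if __name__ == "__main__":
    modules = [1_000, 1_000, 1_000, 1_000]  # four hypothetical 1 MW modules
    print(survives_single_module_loss(modules, 3_000))  # full load still covered
    print(survives_single_module_loss(modules, 3_200))  # load too high for N-1
    print(load_per_module_after_loss(4, 3_000))
```

The design trade-off is visible in the numbers: with four equal modules, normal operation runs each at 75% of its rating, and that headroom is what lets the layout ride through the loss of one module.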

The system also incorporates a buffer tank thermal backup designed to maintain stable GPU operation during maintenance or load transitions. This reflects the growing recognition that AI infrastructure must be engineered not only for peak performance, but for graceful degradation under stress.
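The value of a thermal buffer comes down to simple heat-capacity arithmetic: the time a tank can absorb heat is roughly t = m · c_p · ΔT / P, where m is the coolant mass, c_p its specific heat, ΔT the temperature rise the GPUs can tolerate and P the heat load. The sketch below applies that formula assuming a water-filled, well-mixed tank with no other heat rejection; the tank size, allowed rise and load are hypothetical, as the article gives no buffer tank specifications.

```python
# Rough ride-through estimate for a liquid-cooling buffer tank.
# Assumptions (illustrative, not Vertiv specifications): water coolant,
# fully mixed tank, and the tank absorbs the entire heat load on its own.

WATER_DENSITY_KG_PER_L = 1.0   # approximate, near room temperature
WATER_CP_KJ_PER_KG_K = 4.186   # specific heat capacity of water


def ride_through_seconds(tank_litres: float, allowed_rise_k: float,
                         heat_load_kw: float) -> float:
    """Seconds until the tank warms by allowed_rise_k under heat_load_kw."""
    if heat_load_kw <= 0:
        raise ValueError("heat load must be positive")
    mass_kg = tank_litres * WATER_DENSITY_KG_PER_L
    stored_kj = mass_kg * WATER_CP_KJ_PER_KG_K * allowed_rise_k
    return stored_kj / heat_load_kw  # kJ divided by kJ/s gives seconds


if __name__ == "__main__":
    # Hypothetical: 10,000 L tank, 5 K allowable rise, 1.25 MW heat load.
    t = ride_through_seconds(10_000, 5, 1_250)
    print(f"{t:.0f} s (about {t / 60:.1f} minutes)")
```

With these hypothetical figures the tank buys under three minutes, which suggests why such buffers are framed as bridging maintenance windows or load transitions rather than replacing active cooling.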

Viktor Petik, senior vice president for infrastructure solutions at Vertiv, said the aim was to move beyond traditional cooling approaches and give organisations the ability to scale high-density, liquid-cooled environments with confidence. His comments point to a broader recalibration underway in the industry, where reliability and predictability are becoming as important as raw performance.

The prefabricated nature of the platform is also positioned as a way to deliver greater cost certainty. Factory-integrated and tested components reduce on-site complexity and variability, an increasingly important consideration as data centre builds face skilled labour shortages and rising construction costs.

A sign of where AI infrastructure is heading

While Vertiv’s announcement focuses on a specific set of configurations, it reflects a wider convergence of trends shaping the future of AI infrastructure. Power demand is rising faster than many grids can accommodate. Cooling is becoming the limiting factor in where AI can be deployed. And operators are under pressure to move faster than traditional design and build cycles allow.

Modular, hybrid-cooled infrastructure is emerging as one response to those pressures. By combining liquid and air cooling within prefabricated designs, vendors are trying to offer a middle ground between performance and practicality. Whether this approach becomes the dominant model remains to be seen, but the direction of travel is clear.

AI is forcing data centres to evolve from static facilities into adaptable platforms, capable of scaling rapidly while managing unprecedented thermal loads. The introduction of higher-density modular solutions is less about product innovation and more about acknowledging a simple truth: as AI reshapes the digital economy, it is also redefining the physical limits of the infrastructure that supports it.