For years, artificial intelligence has been discussed in terms of models, data and compute. Increasingly, however, a more prosaic constraint is coming into focus: whether complex, autonomous systems can actually be operated reliably at scale. As AI-driven applications generate vast volumes of operational data and behave in less predictable ways, traditional approaches to monitoring and troubleshooting are starting to break down.

That context sits behind the decision by Snowflake to acquire Observe, a move that reflects a broader shift in how enterprises are thinking about observability in an AI-first world. The deal, announced this week, signals that observability is no longer being treated as a standalone IT function, but as a core data and AI problem that sits alongside analytics and application development.

Snowflake says the acquisition will allow it to deliver AI-powered observability built on open standards and designed to operate at the scale and economics required by modern enterprises. The company is positioning the move as an extension of its AI Data Cloud, enabling customers to ingest and retain all of their telemetry data, from logs and metrics to traces, without the cost trade-offs that have historically forced teams to sample or discard information.

When AI makes reliability a business issue

As enterprises build increasingly complex AI agents and data applications, operational reliability is becoming less about system uptime and more about business continuity. Sridhar Ramaswamy, chief executive of Snowflake, framed the issue starkly, arguing that reliability is no longer just an IT metric but a business imperative as systems become more distributed, dynamic and autonomous.

The challenge is scale. AI-driven applications generate unprecedented volumes of telemetry data, often measured in terabytes or petabytes. Traditional observability tools were not designed for this volume or complexity, so many organisations rely on partial data and short retention windows to control costs. The result is limited visibility precisely as systems become harder to understand and diagnose.

Observe was built on Snowflake from its inception, and the acquisition brings its observability platform directly into the Snowflake environment. Central to this is Observe's AI-powered Site Reliability Engineer, which uses a unified context graph to correlate logs, metrics and traces. By combining this with Snowflake's high-fidelity data platform, the companies say teams can move from reactive monitoring to proactive, automated troubleshooting, resolving production issues significantly faster.
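Correlating telemetry signals generally depends on a shared identifier. In OpenTelemetry, for example, log records can carry the trace and span IDs of the operation that produced them. The following is a minimal, hypothetical sketch of that general idea in Python: it groups spans and logs by trace ID and surfaces the error logs attached to failing traces. It is not Observe's context graph or any Snowflake API; the `Span` and `LogRecord` types, their field names and the sample data are assumptions made purely for illustration, and metrics are left out for brevity.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical, simplified telemetry records (not a real vendor schema).
@dataclass
class Span:
    trace_id: str
    span_id: str
    service: str
    duration_ms: float
    error: bool


@dataclass
class LogRecord:
    trace_id: str
    span_id: str
    severity: str
    body: str


def correlate(spans, logs):
    """Group spans and logs that share a trace ID into one view per trace."""
    by_trace = defaultdict(lambda: {"spans": [], "logs": []})
    for span in spans:
        by_trace[span.trace_id]["spans"].append(span)
    for rec in logs:
        by_trace[rec.trace_id]["logs"].append(rec)
    return dict(by_trace)


def failing_traces(correlated):
    """Map each trace containing an errored span to the bodies of its ERROR logs."""
    result = {}
    for trace_id, signals in correlated.items():
        if any(span.error for span in signals["spans"]):
            result[trace_id] = [
                rec.body for rec in signals["logs"] if rec.severity == "ERROR"
            ]
    return result


# Illustrative sample data: one healthy trace and one failing trace.
spans = [
    Span("t1", "s1", "checkout", 120.0, False),
    Span("t2", "s2", "checkout", 950.0, True),
]
logs = [
    LogRecord("t2", "s2", "ERROR", "payment gateway timeout"),
    LogRecord("t1", "s1", "INFO", "order placed"),
]

print(failing_traces(correlate(spans, logs)))  # {'t2': ['payment gateway timeout']}
```

The point of the sketch is the join key: once every signal carries the same trace ID, moving from a symptom (a slow or failed span) to its likely cause (the logs emitted during that request) becomes a lookup rather than a manual search, which is only possible if the relevant telemetry was retained rather than sampled away.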

Data platforms and observability begin to merge

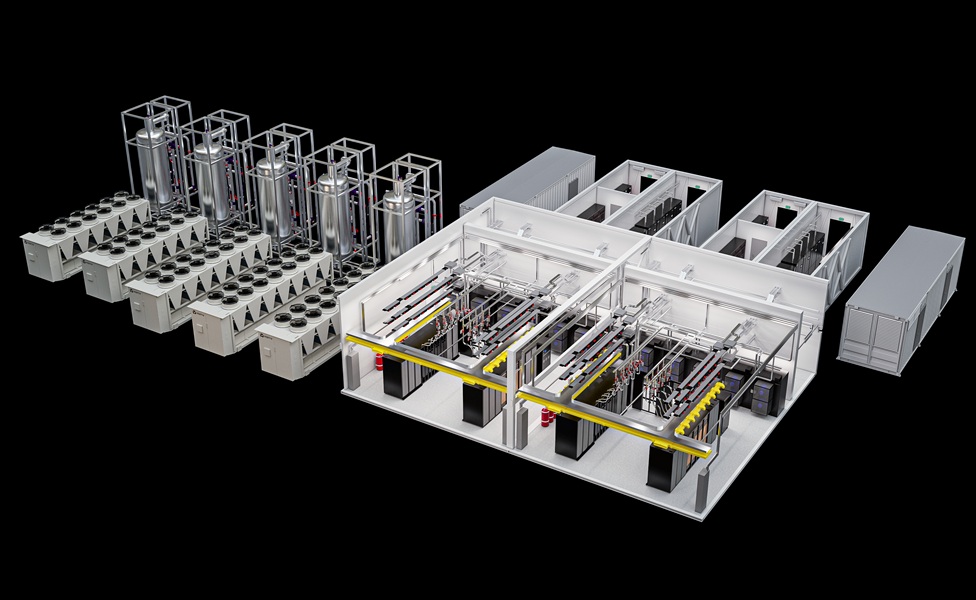

A notable aspect of the deal is its emphasis on open standards. Snowflake says the combined platform will be built on Apache Iceberg and OpenTelemetry, technologies it has actively contributed to. The aim is to create a unified architecture capable of handling massive telemetry volumes using object storage and elastic compute, rather than specialised and costly observability infrastructure.

This approach reflects a growing belief that observability data should be treated as first-class enterprise data. By bringing telemetry into the same platform as business and analytical data, organisations can apply consistent governance, analytics and AI techniques across their entire data estate. The boundaries between data platforms and observability tools, once sharply defined, are starting to blur.

Jeremy Burton, chief executive of Observe, described observability as fundamentally a data problem and positioned the acquisition as a natural extension of Snowflake’s platform. As AI reshapes how applications are built, he argued, the bottleneck is shifting away from writing code towards operating and troubleshooting complex systems in production.

Industry analysts see the move as part of a wider correction. Sanjeev Mohan, principal analyst at SanjMo, said the cost challenges of observability stem from treating telemetry as special-purpose data requiring bespoke infrastructure. He argued that the industry is moving towards bringing observability data into modern data platforms, where it can benefit from lakehouse economics and integrated AI capabilities.

Preparing for autonomous systems at scale

Beyond immediate operational gains, the acquisition points to a longer-term shift in enterprise architecture. As AI agents take on more responsibility and operate with greater autonomy, the ability to observe, understand and intervene in their behaviour becomes critical. Sampling data or relying on delayed insights may no longer be sufficient.

Snowflake says that, once the acquisition closes, it will deepen its focus on helping customers build and operate reliable agents and applications. Observe's developer-oriented approach is expected to complement Snowflake's existing workload engines by providing real-time enterprise context and AI-assisted root cause analysis.

The deal also expands Snowflake’s presence in the IT operations management software market, which Gartner estimates reached $51.7 billion in 2024 after growing nine per cent year on year. That growth reflects the increasing complexity of enterprise systems and the rising cost of operational failure.

The acquisition, which remains subject to regulatory approval, underscores a simple but consequential shift. As AI systems scale, the differentiator is no longer just what they can do, but how reliably they can be run. In that sense, observability is moving from a supporting role to the centre of the enterprise AI conversation.